The AI Doom Industrial Complex: How the Left is Using Technophobia to Centralize Power

A couple of days ago, Dario Amodei, co-founder and CEO of Anthropic, made a sensationalist claim about the looming consequences of artificial intelligence. According to Amodei, AI could eliminate half of all entry-level white-collar jobs and push unemployment to 10 to 20% within the next one to five years. While the scale and specificity of this prediction demand serious scrutiny, what stands out is not the empirical rigor of Amodei's argument but rather the familiar pattern of fearmongering masquerading as sober futurism.

This is far from the first time Anthropic and its leadership have steered public discourse toward AI doomerism. The company has actively lobbied against deregulatory measures proposed under the Trump administration that aimed to liberate American firms from the suffocating grip of local bureaucracies.

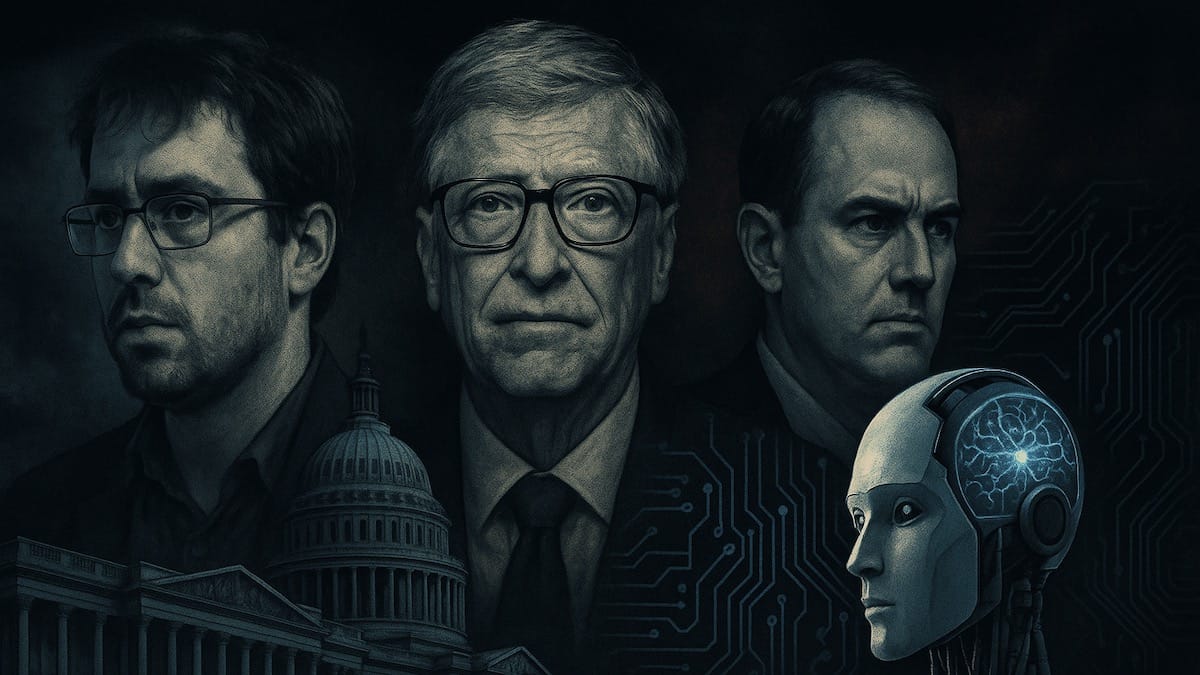

Echoing Amodei’s alarmism, Bill Gates recently claimed that AI could render human doctors and teachers obsolete. This, too, is deeply speculative—but more revealing when viewed in light of Gates’ broader ideological commitments. This is the same figure who forcefully championed prolonged lockdowns and vaccine mandates during the COVID-19 pandemic. The consistent thread here is a technocratic impulse dressed in humanitarian language—a paternalistic instinct to guide the public not through innovation and empowerment, but through control and managed decline. These proclamations about AI-induced mass unemployment are less about forecasting and more about laying the intellectual groundwork for a top-down regulatory regime.

What we’re witnessing is the solidification of an AI doomerism industrial complex. This complex doesn’t merely exist in academic journals or speculative think pieces—it has extended its tentacles deep into the machinery of government and media, finding its natural habitat within the progressive bureaucratic state. The clearest illustration of this symbiosis is the revolving door between Democrat-aligned policy networks and institutional AI firms like Anthropic.

Take, for example, Elizabeth Kelly, who led the U.S. AI Safety Institute under the Biden administration. Her mission was to avert the existential threats posed by runaway AI development, an endeavor that sounded more like a plotline from dystopian science fiction than a grounded policy initiative.

After her government tenure, Kelly transitioned seamlessly into the private sector, joining Anthropic. Similarly, Tarun Chhabra, formerly Deputy Assistant to the President and Coordinator for Technology and National Security, also landed at Anthropic after his government service. These appointments aren’t coincidental. They’re symptomatic of a broader ideological convergence between left-leaning political operatives and institutional technology gatekeepers—actors who see AI not primarily as a tool for liberation and productivity but as a threat requiring centralized oversight and preemptive regulation.

This revolving door mirrors patterns we've seen in other sectors dominated by technocratic elites. Just as the climate change narrative empowered a sprawling regulatory regime, AI doomerism is poised to legitimize a new techno-bureaucracy—staffed by the same class of people who rotate between NGOs, academia, legacy tech firms, and Democratic policy shops. At its core, this class does not aim to innovate but to administrate, to establish itself as the indispensable interpreter and controller of emergent technologies.

To understand the intellectual and financial engine behind this alignment, one must look at Open Philanthropy. Co-founded and long led by Holden Karnofsky, who happens to be the brother-in-law of Dario Amodei, Open Philanthropy has positioned itself as the quiet puppeteer behind much of the AI existential-risk narrative. The organization has poured hundreds of millions into research institutes, lobbying groups, university programs, and corporate initiatives that propagate speculative ideologies. These include alarmist scenarios of human extinction, runaway superintelligence, and the moral imperative of "AI alignment": concepts largely decoupled from current technological realities but deeply effective at justifying preemptive restrictions.

Open Philanthropy’s grants have shaped entire academic disciplines. From funding AI policy centers at elite universities to underwriting Effective Altruism-aligned think tanks, the organization is not just funding science—it is manufacturing consensus. Its financial influence reaches into the journalistic and governmental spheres as well, often indirectly. Media narratives that echo AI panic frequently cite “researchers” or “policy experts” from institutions sustained by Open Philanthropy money. Meanwhile, the same pool of ideologically aligned individuals regularly finds its way into advisory roles at federal agencies and multilateral bodies.

Perhaps most insidiously, Open Philanthropy has been a vocal proponent of global compute governance—a euphemism for supranational control over access to advanced AI infrastructure. This proposal doesn’t merely aim to coordinate or regulate AI development; it envisions a bureaucratic choke point for technological progress itself. In the name of “safety,” it would transfer power from private innovators and national governments to transnational oversight bodies populated by credentialed ideologues. This is not regulation—it is preemption masquerading as precaution.

The very people peddling doomsday scenarios are often those angling for control over the solution. The regulatory prescriptions they offer are invariably voluminous: 200-page diffusion rules, 100-page executive orders. These are not the blueprints of innovation but of administrative domination. Rather than encouraging a vibrant and decentralized ecosystem, the left-wing AI consensus aims to institutionalize bottlenecks and gatekeeping mechanisms under the guise of safety.

In contrast, figures like David Sacks and Marc Andreessen represent a competing philosophy, one increasingly embraced by the Trump administration, that champions technological sovereignty, market dynamism, and strategic competition. Sacks, in particular, has emerged not only as a prominent venture capitalist but also as a kind of AI diplomat for the post-liberal right, offering a vision of AI policy aligned with Trump-era deregulatory instincts. Under this model, the goal is clear: position America to win the AI race not by retreating into precautionary paralysis, but by out-building geopolitical adversaries like China.

Sacks has consistently argued that the best defense against authoritarian AI regimes is not supranational ethics boards or global “compute governance,” but a thriving domestic AI ecosystem grounded in constitutional liberties and entrepreneurial energy. Where the Biden-aligned consensus seeks international guardrails, Sacks pushes for bilateral and plurilateral cooperation among innovation-aligned allies, avoiding the trap of global governance structures that can become tools of political or ideological capture. His position mirrors a broader Trumpian view: American leadership in AI must be secured through strength, speed, and self-reliance, not moral panic and bureaucratic creep.

This philosophical divide cuts to the heart of the culture war surrounding AI. It is not merely a disagreement over technical risks; it is a conflict between visions of society. One vision sees people as capable of agency and resilience; the other sees them as fragile entities in need of perpetual guardianship. In this sense, AI doomerism is just the latest incarnation of an older political impulse: the drive to centralize power under the pretext of crisis.

The precedent is clear. We saw similar narratives during the COVID-19 pandemic, when public health officials, aided by corporate media, implemented sweeping policies based on dubious models and worst-case projections. Those decisions caused massive social and economic disruption, justified by a blend of moral panic and bureaucratic certainty. AI doomerism replicates this formula almost exactly: exaggerated forecasts, elite consensus, media amplification, and policy responses that entrench, rather than challenge, the status quo.

Moreover, this pattern serves a strategic function. By presenting AI as an unmanageable risk, the institutional left justifies retaining and expanding administrative power at a time when their traditional coalitions are fraying. It allows them to sidestep difficult questions about economic stagnation, social decay, and geopolitical weakness by projecting these anxieties onto a technological scapegoat. Rather than reforming education, healthcare, or the labor market, they offer us committees on AI alignment and ethics review boards—staffed by the same insiders who failed in those other domains.

In the final analysis, AI doomerism is not merely a misguided policy framework—it is a profound expression of cultural pessimism. It reflects a lack of confidence in the ability of free individuals to shape the future. It is telling that the same voices sounding the alarm on AI are also those who view population growth, economic expansion, and technological acceleration as inherently suspect or dangerous. What they seek is not merely safety, but stasis—a tightly managed world immune to disruption, even if it means sacrificing prosperity and progress.

To counter this narrative, we need more than technical rebuttals—we need a cultural renewal. We must recover the spirit of civilizational ambition that sees AI not as a threat but as a frontier. We must champion a model of governance that protects rights without smothering innovation. And most importantly, we must break the revolving door between political power and tech regulation—ensuring that those who seek to control the future are not the same ones who most fear it. Only then can we resist the seduction of techno-doomerism and reclaim a future that is not dictated from above but built from below—by creators, thinkers, and builders unafraid of the new.

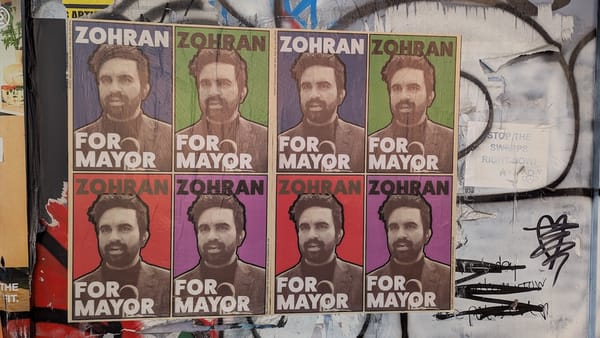

Ziya H. is a Liberty Affair contributor. He lives in Warsaw, Poland. Follow him on X @Hsnlizi.